Continuing my exploration of using a locally hosted Ollama on my Linux desktop computer, I have been doing a lot of reading and research. Today, over lunch, a university professor asked me some questions I didn’t have an immediate answer to. So, I went back to my research to find the answers.

My computer is a Linux desktop with an 11th-generation Intel Core i7-1165G7 processor and 64 gigabytes of RAM. Until today, I have been interacting with Ollama and several models, including Gemma, Codegemma, Phi-3, and Llama3.1, from the command line. Running the Ollama command-line client and interacting with LLMs locally at the Ollama REPL is a good way to begin, but I wanted to learn how to use Ollama in applications, and today I made a good start.

Python is my preferred language, and I use VSCodium as my editor. First, I needed to set up a virtual Python environment. I have a ‘Coding’ directory on my computer, but I wanted to set up a separate one for this project.

$ python3 -m venv ollama

Next, I activated the virtual environment:

$ source ollama/bin/activate

Then, I needed to install the ‘ollama’ module for Python.

pip install ollama

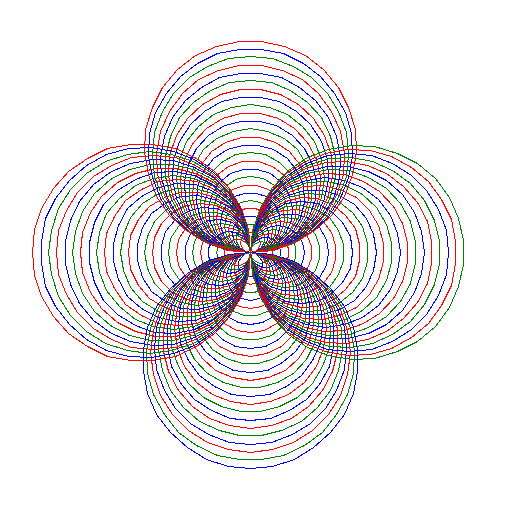

Once the module was installed, I opened up VSCodium. I first used the ‘ollama list’ command to make sure that ‘codegemma’ was installed. Then I took a code snippet I found online and tailored it to generate some Python code to draw a circle spiral.

import ollama

response = ollama.generate(model='codegemma', prompt='Write a Python program to draw a circle spiral in three colors')

print(response['response'])

The query took some time to complete. Despite having a powerful computer, the lack of a GPU significantly impacted performance, even on such a minor task. The resulting code looked good.

import turtle

# Set up the turtle
t = turtle.Turtle()
t.speed(0)

# Set up the colors
colors = ['red', 'green', 'blue']

# Set up the circle spiral parameters
radius = 10
angle = 90
iterations = 100

# Draw the circle spiral
for i in range(iterations):
    t.pencolor(colors[i % 3])
    t.circle(radius)
    t.right(angle)
    radius += 1

# Hide the turtle
t.hideturtle()

# Keep the window open
turtle.done()
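Since the query took a while on a machine without a GPU, one thing that may make the wait easier is streaming: the ‘ollama’ module accepts stream=True and then yields the reply in pieces instead of all at once. The sketch below is only an illustration, not something from the snippet I found. The helper name stream_reply and its generate and out parameters are my own assumptions, added so the function can be tried with a stand-in when no Ollama server is running.

```python
import sys


def stream_reply(prompt, model="codegemma", generate=None, out=sys.stdout):
    """Write a model reply piece by piece and return the full text.

    `generate` is a hypothetical hook for this sketch: it defaults to
    ollama.generate, but any callable with the same keyword arguments works.
    """
    if generate is None:
        # Imported lazily; needs `pip install ollama` and a running Ollama server.
        import ollama
        generate = ollama.generate

    pieces = []
    # With stream=True the call yields chunks; each chunk carries the next
    # bit of text under the 'response' key.
    for chunk in generate(model=model, prompt=prompt, stream=True):
        text = chunk["response"]
        out.write(text)
        out.flush()  # show the text immediately rather than at the end
        pieces.append(text)
    return "".join(pieces)
```

Calling stream_reply('Write a Python program to draw a circle spiral in three colors') should print the code as it is generated. The total time is the same, but at least you can watch the progress.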

You should try playing with the JSON format option for Ollama next. That is where the power of Ollama in Python really shines, IMHO.

Thanks for the idea. I’m learning more every day. Are you an experienced Ollama user? I’m curious to learn more.

My experience is measured in weeks. I only learned how to use the format option earlier this week, but it works a lot nicer than the attempts at regex I had before then.

I’ll be publishing it (in a bodgey state) on my GitHub within the next two days.

Essentially, it forces the model to output JSON. Currently (if I understand correctly), it does not force the output to match the JSON structure you specified, but it is better than running regex on the output, which is what the other options amount to.
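For anyone who wants to try the format option described above, here is a minimal sketch, assuming the ‘ollama’ Python module and a running Ollama server. It passes format='json' to ollama.generate and parses the reply with the standard json module. Because the option does not guarantee a particular structure, the sketch also checks for the keys it expects. The helper name generate_json and its required_keys and generate parameters are hypothetical, made up for this illustration.

```python
import json


def generate_json(prompt, required_keys=(), model="codegemma", generate=None):
    """Ask the model for JSON, parse it, and check that expected keys exist.

    `generate` is a hypothetical hook for this sketch: it defaults to
    ollama.generate, but any callable with the same keyword arguments works.
    """
    if generate is None:
        # Imported lazily; needs `pip install ollama` and a running Ollama server.
        import ollama
        generate = ollama.generate

    # format='json' asks Ollama to constrain the reply to valid JSON.
    response = generate(model=model, prompt=prompt, format="json")
    data = json.loads(response["response"])

    # The shape is not enforced, so verify it before relying on it.
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object, got " + type(data).__name__)
    missing = [key for key in required_keys if key not in data]
    if missing:
        raise ValueError("reply is missing keys: " + ", ".join(missing))
    return data
```

It seems to help to describe the keys you want in the prompt itself, for example: generate_json('Reply in JSON with the keys "language" and "code". Write a program to draw a circle.', required_keys=('language', 'code')).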