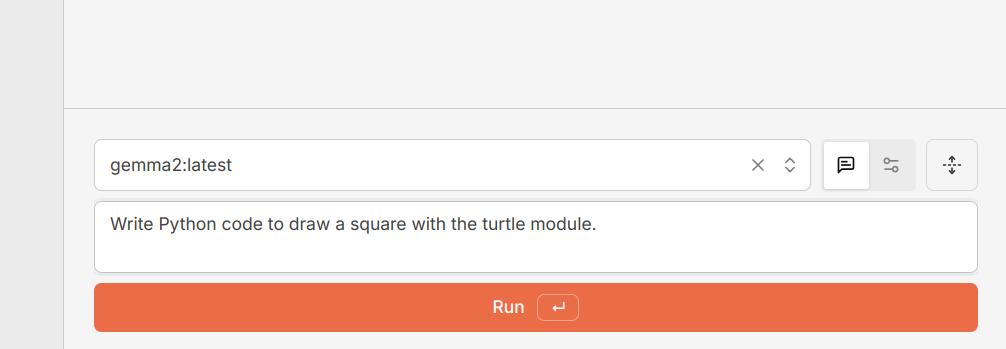

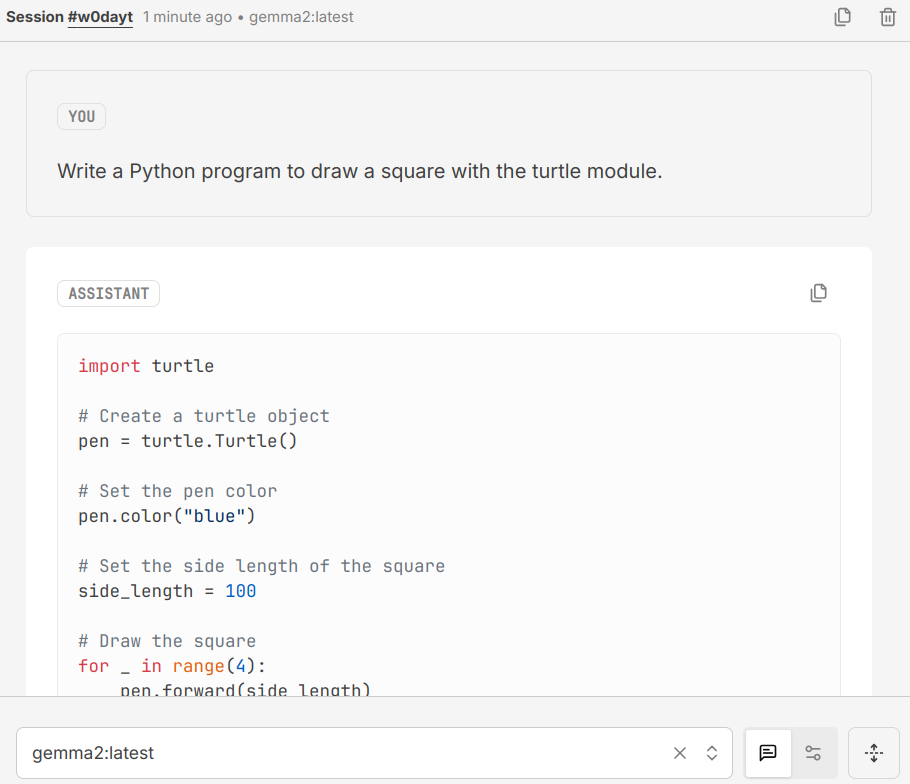

Mastodon is an open-source social networking platform for microblogging. While it has a web-based interface, many users prefer to use a client to access Mastodon. Clients are programs or applications that allow users to access and interact with the Mastodon platform from various devices, including computers, tablets, and phones. I moved to Fosstodon in 2019, and it has become my primary social networking site.

Web Interface

Like most users, I started with the Mastodon web app. I pointed my browser at joinmastodon.org, found an instance to join, created an account, and logged in. I used the web app to read, like, and reblog posts from my favorite Mastodon users, and to reply to posts, all without installing anything locally. The experience felt familiar from other social media websites.

The disadvantage of the web app is that it lacks the richness of a dedicated Mastodon client. Clients provide a more organized and streamlined interface, which makes it easier to navigate, manage notifications, and interact with others in the fediverse. Clients also make it easier to find and generate useful hashtags, which are essential to sharing your message in a non-algorithm-driven environment.

Mastodon is open source, though, so you have options. In addition to the web app, there are many Mastodon clients. According to Mastodon, nearly sixty clients are available for desktop, tablet, or phone.
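Part of why so many clients can exist is that a Mastodon server exposes an HTTP API that any program can call. As a rough illustration only (not how any particular client is written), here is a minimal Python sketch that reads recent public posts for a hashtag. The function names are my own, and `fosstodon.org` and `linux` in the note below are just example values.

```python
import html
import json
import re
import urllib.request
from urllib.parse import quote, urlencode


def tag_timeline_url(instance: str, hashtag: str, limit: int = 5) -> str:
    """Build the URL of an instance's hashtag timeline endpoint."""
    tag = quote(hashtag.lstrip("#"))  # the API expects the tag without '#'
    query = urlencode({"limit": limit})
    return f"https://{instance}/api/v1/timelines/tag/{tag}?{query}"


def summarize(status: dict) -> str:
    """Return 'account: text' for one status.

    The API delivers post content as HTML, so tags are stripped
    and entities decoded to get plain text.
    """
    text = html.unescape(re.sub(r"<[^>]+>", "", status["content"]))
    return f"{status['account']['acct']}: {text}"


def fetch_tag_timeline(instance: str, hashtag: str, limit: int = 5) -> list:
    """Fetch recent public posts for a hashtag (needs network access)."""
    url = tag_timeline_url(instance, hashtag, limit)
    with urllib.request.urlopen(url) as response:
        return json.load(response)
```

Calling `fetch_tag_timeline("fosstodon.org", "linux")` and passing each result to `summarize` would print a small hashtag feed. Some instances restrict unauthenticated access, so the request may fail there; a real client would also handle login, errors, and rate limits.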

Clients

Each client app has its own features, UI design, and functionality, but they all ultimately provide access to the Mastodon platform.

I started my client journey with the Mastodon app for iOS, the official iOS app for Mastodon. It is easy to use, open source, and written in Swift.

I then moved to Metatext, which is no longer being developed. I liked the Metatext interface, which made interacting with Mastodon easier on my iPhone. Metatext is open source with a GPL v3 license.

I am currently using Ice Cubes, which is my favorite Mastodon app for both iOS and macOS. Ice Cubes has everything I was looking for in a Mastodon client. It is built entirely with SwiftUI, and it is fast, light on resources, and easy to use. Its design feels intuitive on iPhone, iPad, and macOS.

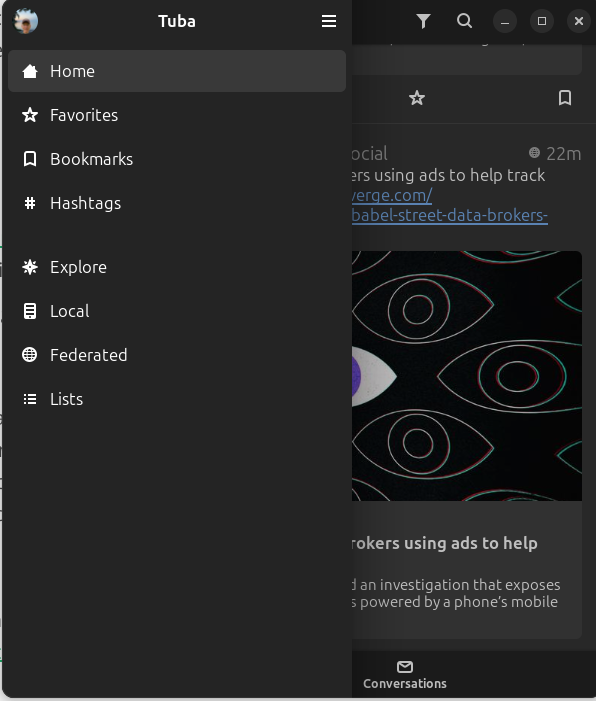

My favorite desktop Linux app for Mastodon is Tuba. It is available as a Flatpak. It’s intuitive and easy to use. Tuba is open source with a GPL v3 license.

How is Mastodon changing your reading habits? What are your favorite apps? Be sure to comment.